Your Partner at Every Stage of the AI Journey

From Initial Strategy to Enterprise Scale, We Engineer Your AI Success

Whether you are starting your first AI initiative, building a dedicated platform, or optimizing a mature infrastructure, Osol provides the strategic guidance and technical expertise you need to turn potential into performance.

The Challenges We Solve

Organizations are pushing into AI, but infrastructure is often a bottleneck. We help you move forward.

GPU Scarcity or Inefficient Use

AI teams lose time to contended shared clusters, misallocated GPUs, and manual management, all of which drive up cloud costs.

Slow or Unreliable Model Deployment

Without MLOps, deploying and serving models is manual, risky, and error-prone, creating downtime and delays.

High Costs with No Visibility

GPU bills skyrocket without clear tracking of compute use per team or project.

Lack of Observability in AI Workflows

You can’t fix what you can’t monitor. Without observability, model drift, latency regressions, and unexpected model behavior go unchecked.

Security, Compliance & Privacy Risks

Data pipelines, model endpoints, and integrations with systems like SCADA or ERP must meet strict internal policies as well as external standards and frameworks such as ISO 27001, SOC 2, and those published by NIST.

The Benefits of Choosing Osol

We help you go beyond notebooks to production-ready, secure, and sustainable stacks.

Faster Training & Inference with the Right Hardware

Benefit from optimized GPU/TPU setups based on your workloads and models.

Mature MLOps for Repeatable Success

Gain version control, reproducibility, and automated CI/CD for every experiment.

Compliance Built into Data & Model Flows

Align with standards like ISO 27001, SOC 2, and IEC 62443 through built-in audit trails, role-based access, encryption, and retention policies.

Lower Cloud Costs Through Smart Resource Allocation

Keep costs under control with custom dashboards, alerts, and dynamic autoscaling.

Hybrid, Multi-Cloud & Edge Flexibility

Get seamless orchestration across public clouds, on-premises data centers, and secure edge locations for complete control and low-latency processing.

Seamless Integration with Existing Platforms

Modernize your core operations by integrating AI infrastructure with your existing ERP, DCS, SCADA, and other business systems.

Reliable Operations with a Managed Support Model

Benefit from our proactive managed services, backed by guaranteed Service Level Agreements (SLAs) and clear KPIs, ensuring your AI infrastructure runs smoothly 24/7.

Our AI Infrastructure Management Services

Build the foundation for AI success with robust, scalable infrastructure designed for high-performance development and seamless deployment.

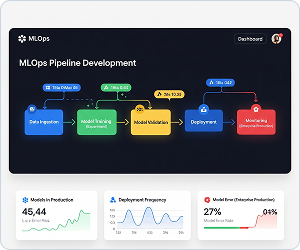

MLOps Pipeline Development

We engineer end-to-end automated pipelines that manage the entire model lifecycle, including CI/CD, drift detection, and automated versioning to ensure reliable deployments.

From Laptop to Leader. Ship AI You Can Trust.

A powerful model is useless if it's stuck in a notebook. The last mile of AI deployment is often the hardest. Osol bridges the critical gap between data science and operations, building robust, automated MLOps pipelines that move your models from experiment to enterprise-grade production reliably, rapidly, and at scale.

The Chasm Between Prototype and Production

Many organizations invest heavily in developing sophisticated AI models, only to see them falter at the deployment stage. This "last-mile problem" creates a significant gap between AI investment and business value, preventing promising models from ever having a meaningful impact. Key challenges include:

Manual Deployment Bottlenecks

Model Performance Decay (Drift)

Lack of Reproducibility and Governance

The Advantage of an AI Factory

By implementing a systematic MLOps pipeline with Osol, you transform your AI initiatives from a series of ad-hoc projects into a scalable, predictable "AI Factory" that continuously delivers business value. This enables you to:

Drastically Accelerate Time-to-Value

Ensure Production-Grade Reliability

Maintain Peak Performance with Continuous Improvement

Gain End-to-End Governance and Compliance

Our End-to-End Pipeline Engineering

Osol provides a comprehensive service to design, build, and manage the infrastructure that powers your entire machine learning lifecycle. Our expertise includes:

End-to-end automated model training, deployment, monitoring, and retraining

Continuous integration/continuous deployment (CI/CD) for ML models

Model performance tracking and drift detection

Automated rollback and version management systems
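To make the drift detection item above more concrete, here is a minimal sketch of one common approach: comparing the distribution of a single numeric feature in production against its training baseline using the Population Stability Index (PSI). The function name, the bin count, and the alert threshold are illustrative assumptions, not a description of any specific Osol tooling; a real pipeline would tune these per feature.

```python
import math
from typing import Sequence


def population_stability_index(
    baseline: Sequence[float], live: Sequence[float], bins: int = 10
) -> float:
    """PSI between a baseline sample and a live sample of one numeric feature."""
    lo, hi = min(baseline), max(baseline)
    # Equal-width bins over the baseline range; fall back to 1.0 if the baseline is constant.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so live values outside the baseline range land in the edge buckets.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) when a bucket is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bucket_shares(baseline)
    actual = bucket_shares(live)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical alert threshold; treat it as a starting point to tune, not a standard.
DRIFT_THRESHOLD = 0.2


def has_drifted(baseline: Sequence[float], live: Sequence[float]) -> bool:
    return population_stability_index(baseline, live) > DRIFT_THRESHOLD
```

In a pipeline, a check like `has_drifted` would typically run on a schedule against recent inference inputs, and a positive result would raise an alert or trigger retraining.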

Ready to Industrialize Your AI?

Stop letting manual processes and deployment bottlenecks hold your AI initiatives back. The true ROI of machine learning is only realized when models are consistently and reliably delivering value in production. Our team of MLOps experts is ready to build the automated engine your business needs to scale.

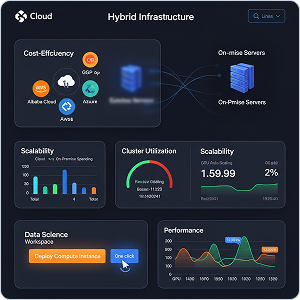

Cloud, Hybrid & Edge Infrastructure Setup

Whether multi-cloud, hybrid, or at the edge, we build the high-performance environment your AI needs with scalable GPU clusters and containerized workloads.

Cloud & On-Premises AI Infrastructure

The Power Plant for Your AI. Engineered for Performance, Built for Scale.

Osol designs and builds the high-performance engine your AI initiatives demand, whether in the cloud, on-premises, or as a hybrid solution. We architect for cost-efficiency, scalability, and speed, providing your data science teams with frictionless access to the compute power they need to innovate.

When Your Infrastructure Can't Keep Pace with Ambition

As AI models grow in complexity, many organizations find their existing infrastructure becoming the primary bottleneck to innovation. Building the right foundation is critical, but getting it right is fraught with challenges that can derail your entire AI strategy:

Spiraling Cloud Costs

Data Scientist Downtime

Security and Data Gravity

Overwhelming Technical Complexity

The Advantage of Purpose-Built Infrastructure

By partnering with Osol to engineer a tailored AI infrastructure, you move from fighting fires to fueling innovation. You gain a strategic asset designed to maximize the performance and ROI of every AI investment. This enables you to:

Optimize and Control Your AI Spend

Unleash Data Science Productivity

Achieve Strategic Flexibility and Control

Build a Scalable, Future-Proof Foundation

Our End-to-End Infrastructure Architecture

Osol provides a complete suite of services to build and manage the high-performance computing foundation for your modern AI workloads. Our process includes:

Scaling GPU clusters and optimizing specialized hardware

Designing multi-cloud deployment strategies with cost optimization

Integrating edge computing for low-latency AI applications

Managing containerized AI workloads with Kubernetes orchestration

Ready to Build an Engine, Not Just Rent Servers?

Data Lake & Feature Store Engineering

We engineer robust data lakes and feature stores with automated ingestion and real-time capabilities to accelerate model development.

Your Data, Centralized. Your Features, Production-Ready.

The world's best models fail on bad data. Osol engineers the data backbone your AI strategy needs, building a centralized data lake as your single source of truth and a feature store to serve consistent, reliable data to your models. We transform your scattered data assets into a high-octane fuel source for AI innovation.

The Data Chaos Holding Your AI Hostage

Before you can build great models, you need great data. For most organizations, this is where the AI journey stalls. The underlying data ecosystem is fragmented and unprepared for the demands of machine learning, leading to critical challenges:

Data Silos and Swamps

Wasted Data Science Cycles

Training-Serving Skew

Duplicated Effort and Inconsistency

The Advantage of a Centralized Data Engine

By engineering a modern data lake and feature store with Osol, you create a standardized, efficient, and reliable data foundation that empowers your entire organization to build better AI, faster. This enables you to:

Create a Single Source of Truth

Dramatically Accelerate AI Development

Eliminate Model-Breaking Inconsistency

Foster Collaboration and Reusability

Our End-to-End Data Engineering Services

Osol provides the full spectrum of data engineering services required to build a modern data platform for AI. Our process includes:

Building robust data architectures for AI with automated ingestion and processing

Implementing real-time data streaming and batch processing systems

Establishing data governance and lineage tracking for compliance

Automating feature engineering with reusable frameworks

Ready to Fuel Your AI with High-Quality Data?

AI Model Versioning & Experiment Tracking

We implement comprehensive systems for model lifecycle management that allow your team to track every experiment, compare performance, and manage versions.

The magic of AI happens through iteration, but unmanaged experiments lead to chaos, not breakthroughs. Osol brings scientific rigor to your data science workflow, implementing systems that track every experiment and version every model. We turn your R&D process into a transparent, reproducible, and governable asset that builds institutional knowledge.

The Hidden Costs of Unruly R&D

As your AI team scales, the very creativity that drives innovation can lead to untracked, irreproducible work that undermines your progress. Without a systematic approach, you face significant and costly challenges that threaten your entire AI investment:

The "Magic" Model Problem

Wasted, Duplicative Efforts

Risky "Black Box" Deployments

Compliance and Audit Failures

The Advantage of a Reproducible Workflow

By implementing a robust versioning and tracking system with Osol, you replace chaos with clarity and transform your ML development into a strategic, well-oiled machine. This allows you to:

Achieve 100% Reproducibility

Enhance Team Collaboration

De-Risk Deployments and Ensure Governance

Our End-to-End Model Lifecycle Management

Osol provides the expertise to integrate industry-leading tools and best practices directly into your data science workflow, providing a complete solution for managing your ML assets. Our services include:

Comprehensive model lifecycle management

Experiment tracking with performance comparison tools

A/B testing frameworks for model deployment

Automated model evaluation and selection systems
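As an illustration of the A/B testing item above, the sketch below shows one simple way to split traffic between a baseline model and a candidate model: hash a stable request key so that the same user always sees the same variant. The function name, the `salt` value, and the 10% default share are hypothetical choices for this example only.

```python
import hashlib


def assign_variant(
    request_key: str, candidate_share: float = 0.1, salt: str = "experiment-1"
) -> str:
    """Deterministically route a request key to 'candidate' or 'baseline'."""
    digest = hashlib.sha256(f"{salt}:{request_key}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to one of 10,000 buckets.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    # Keys in the lowest buckets go to the candidate model.
    return "candidate" if bucket < round(candidate_share * 10_000) else "baseline"
```

Because the assignment depends only on the key and the salt, it needs no shared state between serving replicas, and changing the salt reshuffles users for a new experiment.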

Ready to Turn Your Research into a Reliable Asset?

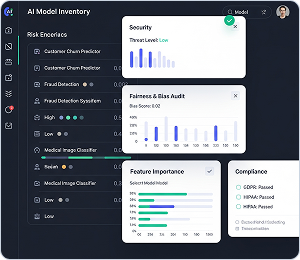

AI Security & Governance

We build security and governance directly into your infrastructure, implementing strict access controls, compliance frameworks, and tools for model explainability and bias detection.

Trust is Not a Feature. It's the Foundation.

In the age of AI, "Does it work?" is only half the question. The other half is "Can we trust it?" Osol helps you answer with confidence, implementing robust security and governance frameworks that make your AI systems safe, fair, explainable, and compliant. We help you build AI that you can stand behind, protecting your customers and your brand.

The Unseen Risks of Intelligent Systems

As AI becomes more powerful and autonomous, it introduces a new class of complex risks that can silently undermine your business, expose you to liability, and erode customer trust. Key challenges include:

The "Black Box" Dilemma

Hidden Bias and Reputational Damage

New and Sophisticated Attack Surfaces

The Shifting Regulatory Landscape

The Advantage of Responsible, Defensible AI

By proactively embedding security and governance into your AI lifecycle with Osol, you don't just mitigate risk; you build a lasting competitive advantage based on trust. This empowers you to:

Build Deep Customer and Stakeholder Trust

Protect Your Brand and Ensure Fairness

Secure Your Most Valuable AI Assets

Confidently Navigate Compliance

Our End-to-End AI Trust & Safety Services

Osol provides a comprehensive suite of services designed to embed security, ethics, and compliance into the DNA of your AI systems. Our process includes:

Implementing security protocols and access controls for AI systems

Implementing compliance frameworks for regulated industries

Deploying model explainability and bias detection tools

Applying privacy-preserving AI techniques

Ready to Make Your AI Defensible, Not Just Powerful?

Why Osol for AI Infrastructure?

We don't just provide hardware or software; we architect and manage the end-to-end ecosystem that makes your AI initiatives successful.

Vendor-Agnostic, Best-Fit Solutions

As independent experts across AWS, Azure, GCP, and private cloud environments, we design the optimal, most cost-effective stack tailored to your specific models and business requirements, free from vendor bias.

Deep Expertise at the AI-Infrastructure Nexus

Our team understands the unique demands of training and inference workloads and knows how to build the resilient, automated MLOps pipelines required to support them at production scale.

Governance and Cost Control at the Core

Our solutions provide granular visibility into compute costs, enforce security policies, and create audit trails to ensure your AI operations are both efficient and enterprise-ready.

A Partnership Focused on ROI and Performance

We provide clear ROI guidance, establish measurable Key Performance Indicators (KPIs), and back our managed services with robust Service Level Agreements (SLAs) to ensure your investment delivers tangible business value.

Case Studies

E-commerce Optimization

Implemented automated model retraining pipelines, cost optimization strategies, and an A/B testing framework. The solution improved recommendation accuracy by 25% and reduced infrastructure costs by 30%.

Manufacturing Platform

Built a centralized ML platform to resolve infrastructure bottlenecks that were consuming 70% of data scientists' time. The solution increased data scientist productivity by 50% and standardized model deployment across 12 facilities.

Frequently Asked Questions

Should we build our AI infrastructure on the cloud or on-premises?

The best choice depends on your specific needs regarding data sovereignty, security, cost, and scalability. We conduct a thorough analysis of your workloads to recommend the right hybrid or dedicated strategy.

How do you help us optimize our use of expensive GPU resources?

We implement sophisticated scheduling, orchestration, and monitoring tools for full visibility into GPU utilization. Through right-sizing instances and automating resource allocation, we ensure you get maximum performance from every dollar spent.
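As a rough illustration of what per-team visibility into GPU spend can look like, the sketch below aggregates usage records into a cost per team. The record fields, team names, and hourly rates are hypothetical; in practice these figures would come from your scheduler's accounting data and your cloud billing exports.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class GpuUsageRecord:
    team: str
    gpu_count: int
    hours: float
    hourly_rate_usd: float  # assumed price per GPU per hour


def cost_by_team(records: Iterable[GpuUsageRecord]) -> Dict[str, float]:
    """Sum GPU spend per team from individual job usage records."""
    totals: Dict[str, float] = defaultdict(float)
    for record in records:
        totals[record.team] += record.gpu_count * record.hours * record.hourly_rate_usd
    return dict(totals)


# Example with made-up numbers.
usage = [
    GpuUsageRecord(team="vision", gpu_count=4, hours=10.0, hourly_rate_usd=2.0),
    GpuUsageRecord(team="nlp", gpu_count=8, hours=5.0, hourly_rate_usd=2.0),
    GpuUsageRecord(team="vision", gpu_count=1, hours=2.0, hourly_rate_usd=2.0),
]
print(cost_by_team(usage))
```

A breakdown like this is the kind of input that feeds cost dashboards and budget alerts.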

We already have a DevOps team. How does Osol work with them?

We act as a specialized extension of your team. We collaborate closely with your DevOps and IT staff, introducing MLOps best practices and tools that integrate seamlessly with your existing CI/CD pipelines.

What is MLOps, and why is it critical for our infrastructure?

MLOps (Machine Learning Operations) is the practice of automating and standardizing the entire machine learning lifecycle. It's critical for making your AI development process repeatable, reliable, and auditable.

How does Osol integrate AI with our existing SCADA or ERP systems?

We design secure data pipelines and APIs that let AI models consume data from your core systems (e.g., ERP, DCS, SCADA) and send insights back to them, enabling capabilities such as predictive maintenance without disrupting current operations.

Let’s Build Your AI Infrastructure

Get the foundation you need to scale AI with confidence, security, and control.

Request Infrastructure Assessment